The field of Computer Vision reached a historic turning point in April 2023 with Meta’s launch of the Segment Anything Model (SAM). This “zero-shot” foundation model radically transformed image segmentation by allowing any object to be outlined without specific prior training.

After months of experimentation on varied datasets, we have identified its strengths and weaknesses and industrialized its use. Today, our expertise allows us to deploy two mature use cases based on our clients’ strategic needs:

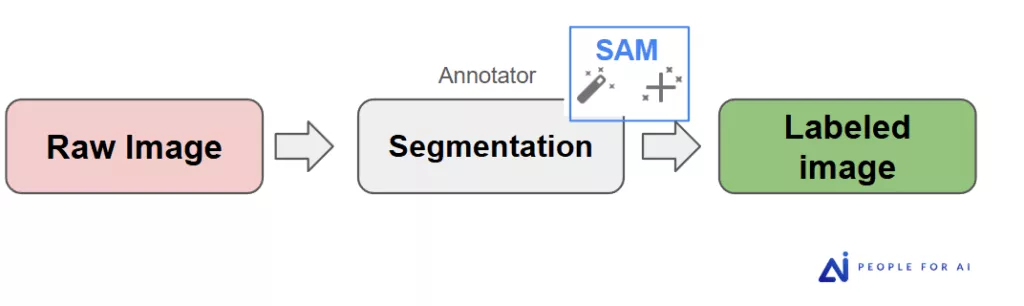

Interactive Assistance: AI at the Service of the Expert Annotator

In this first scenario, SAM is integrated directly as an intelligent assistant within our annotation tools (such as Kili or CVAT). The annotator drives the AI: with a simple point or a bounding box (bbox), they generate a complex polygon almost instantaneously.

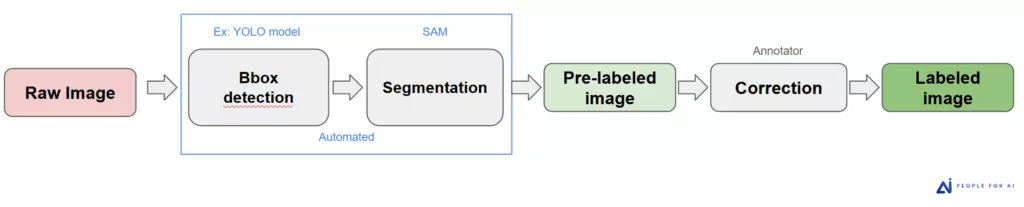

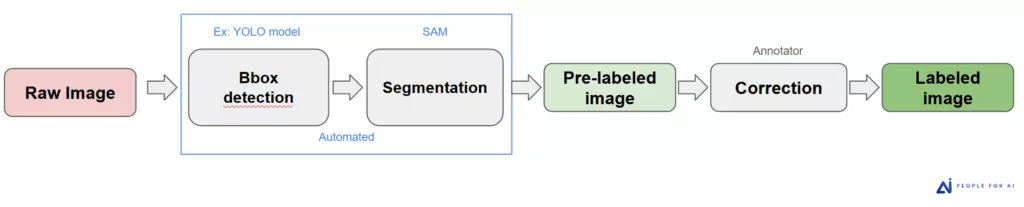

The Automated Pipeline: the power of a bbox detection model + SAM Combo

For massive pre-annotation tasks, SAM functions as a second-stage refiner. It operates downstream from an object detection model (e.g., YOLO), taking the initial bounding boxes and converting them into precise masks at scale.

How it works: If the client already has an object detection model (or a business case suitable for standard detection), the bounding boxes serve as automatic “prompts” for SAM to generate segmentation masks at scale.

The objective: A drastic reduction in costs and lead times. Our annotators then shift from a “creator” role to that of a “corrector/validator” (Human-in-the-loop), which significantly accelerates production.

In this article, we will detail when to prioritize one of these approaches and explore the technical limits of SAM that we have identified in the field.

1. In Which Cases is SAM Most Effective?

Although SAM is a “universal” model, it performs best on specific types of data. For our clients, identifying these use cases makes it possible to capture productivity gains immediately.

SAM particularly excels in the case of objects with sharp and contrasted contours. As soon as the object stands out visually from its background, SAM produces a near-perfect mask in one click or one bounding box (bbox) depending on the case.

These contrasts can take three forms:

- Chromatic contrast (Color)

- Texture contrast: A rough object on a smooth surface. Even if the colors are similar, SAM detects the break in the pattern.

- Luminance contrast (Light): A well-lit object that stands out from a shadowed area.
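To make the luminance case concrete, here is a minimal sketch of one way to estimate how strongly a boxed object stands out from its surroundings. It is an illustrative triage heuristic, not a description of how SAM works internally: the function names and the Michelson-style ratio are assumptions made for this example, while the luma weights are the standard Rec. 709 coefficients.

```python
from statistics import mean


def luma(rgb):
    """Rec. 709 luma of an (R, G, B) pixel with 0-255 channels."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def box_contrast(image, box):
    """Michelson-style contrast between pixels inside `box` and the rest.

    `image` is a list of rows of (R, G, B) tuples; `box` is (x0, y0, x1, y1)
    in pixels, with x1 and y1 exclusive. Returns a value between 0 and 1.
    """
    x0, y0, x1, y1 = box
    inside, outside = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            in_box = x0 <= x < x1 and y0 <= y < y1
            (inside if in_box else outside).append(luma(pixel))
    if not inside or not outside:
        raise ValueError("box must cover part of the image, not all of it")
    l_in, l_out = mean(inside), mean(outside)
    if l_in + l_out == 0:
        return 0.0  # both regions are pure black: no measurable contrast
    return abs(l_in - l_out) / (l_in + l_out)
```

A score close to 1 suggests an object that is clearly separated from its background, while a score close to 0 flags a box where a prompt-based mask is more likely to need manual correction. Any decision threshold would have to be calibrated per dataset.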

In this example, where objects are sharp and contrasted, SAM (used by the annotator in bbox mode) makes no errors:

In this concrete example, using SAM made it possible to finalize the annotation in just 35 seconds, compared to 2 minutes 20 seconds for manual tracing. AI assistance thus divides production time by 4.
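The arithmetic behind that factor can be checked in a few lines. This is only a worked calculation on the two timings quoted above; the function name is an assumption made for the example.

```python
def speedup(manual_seconds, assisted_seconds):
    """How many times faster the assisted workflow is than manual tracing."""
    return manual_seconds / assisted_seconds


manual = 2 * 60 + 20  # 2 minutes 20 seconds of manual tracing
assisted = 35         # seconds with SAM in bbox mode

print(speedup(manual, assisted))  # 4.0
print(1 - assisted / manual)      # 0.75, i.e. 75% of the tracing time saved
```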

2. In Which Cases Does SAM Meet Its Limits?

While SAM is a revolution, it is not a “miracle” solution. Its efficiency relies on data readability: as soon as the image deviates from sharpness and contrast standards, the model loses reliability. As annotation experts, our role is to identify these risk zones to guarantee the final quality of our clients’ datasets.

Here are the four major cases where SAM encounters its technical limits:

Source Image Quality (Blur and Noise)

The old adage “Garbage in, Garbage out” applies perfectly here. SAM needs exploitable pixels to “understand” a shape. On images from drones or onboard cameras, motion blur causes edges to disappear. SAM then generates imprecise masks that “overflow” or “hollow out” depending on the case. Similarly, high JPEG compression creates artifacts that the AI may erroneously interpret as contours.
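One common way to flag blurry images before sending them to SAM is the variance of the Laplacian: sharp edges produce strong second-derivative responses, while blurred images produce weak ones. The sketch below is a pure-Python illustration on a grayscale grid, assuming a 4-neighbour Laplacian kernel; the function name is hypothetical and any cut-off value would need to be tuned per dataset.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels.

    `gray` is a list of rows of grayscale values. Higher values indicate
    sharper edges; values near 0 indicate a flat or blurred image.
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, height - 1)
        for x in range(1, width - 1)
    ]
    return pvariance(responses)
```

Images scoring below a project-specific threshold could be routed to manual tracing, or at least flagged so the annotator expects masks that overflow or hollow out.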

On this image, which has good color contrast but poor quality, we notice that the mask contours are not very precise, but the overall result remains very decent and easily improvable by the annotator in a few seconds:

Lack of Contrast

SAM does not understand the object; it detects signal breaks (luminance and color). If the object is “melted” into its environment, the AI no longer sees clearly. Without luminance or color contrast, it cannot properly isolate the shape. In this example, SAM produces an approximate mask of the referee (blurry image and less contrast in certain areas):

In this example, the annotator used SAM in point mode and in bbox mode; we notice a difference in the trace between the two methods, with a better result for the bbox method:
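The gap between two traces of the same object, for instance the point-mode and bbox-mode masks, can be quantified with Intersection over Union (IoU). The following is a minimal sketch on binary masks stored as nested lists; the function name and mask representation are assumptions made for the example.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over Union of two binary masks of identical shape."""
    if len(mask_a) != len(mask_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    ):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            intersection += bool(a and b)
            union += bool(a or b)
    # Two empty masks are treated as identical.
    return intersection / union if union else 1.0
```

An IoU of 1.0 means the two traces are identical; the lower the value, the more the two prompting modes disagree on the object's extent.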

In these cases where SAM proposes a very imperfect mask, it is often faster to redo the mask by hand than to correct the mask generated by SAM. This practice must be discussed with the client beforehand, as it implies that some masks are traced via SAM and others manually. The heterogeneity of the traces can then impact the model (see next chapter).
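The correct-or-retrace decision can be framed as a simple time comparison. The sketch below is a hypothetical rule of thumb, not a measured production rule: the function name, and the 8 seconds per correction used in the usage lines, are illustrative assumptions. Only the 140-second manual tracing time comes from the example earlier in this article.

```python
def faster_to_retrace(n_corrections, seconds_per_correction, manual_seconds):
    """True when fixing the SAM mask would take longer than retracing by hand."""
    return n_corrections * seconds_per_correction > manual_seconds


# Assumed 8 s per correction, 140 s for a full manual trace.
print(faster_to_retrace(24, 8, 140))  # True: 192 s of fixes vs 140 s
print(faster_to_retrace(5, 8, 140))   # False: 40 s of fixes vs 140 s
```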

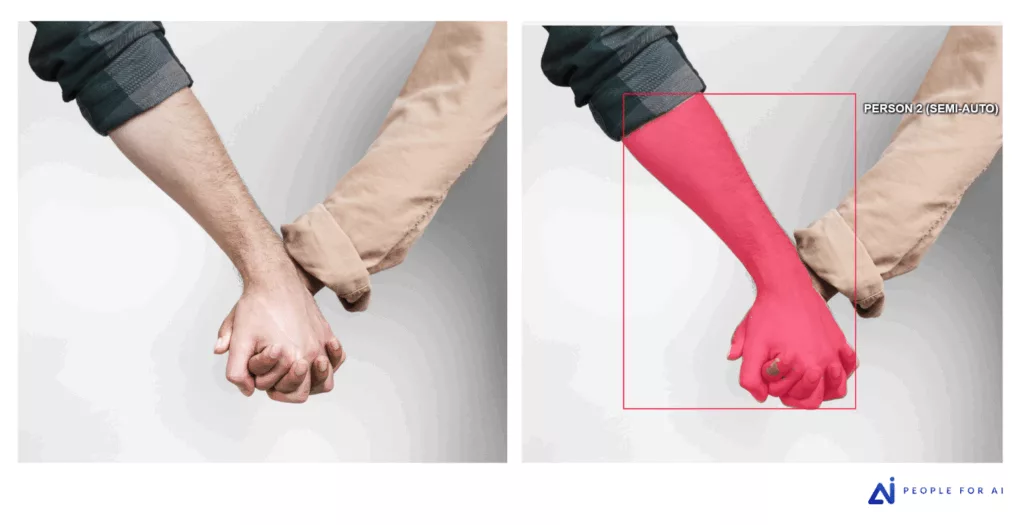

Semantics and Occlusion

SAM identifies shapes, but it lacks contextual logic. In the case of intertwined or cut-off objects, SAM detects visible shapes but does not “understand” the object as a whole, which can lead to this type of error:

“Pixel-Perfect” Precision on Fine Textures

The model naturally tends to smooth contours to produce aesthetic masks. For very high-fidelity needs (segmentation of hair, textile fibers, or fine vegetation), this smoothing becomes a defect. Human intervention is then the only way to obtain the surgical precision required by certain cutting-edge Deep Learning models. In this example, the serrated contour of the leaf as well as the very fine tip were not included in the mask created by SAM:

3. The Trade-off Between Correction and Manual Tracing: A Matter of Homogeneity

When SAM proposes a very imperfect mask, for all the reasons seen in the previous chapter, it is often faster and more precise to delete the mask and retrace it entirely by hand rather than attempting to correct dozens of failing points. While this approach increases productivity, it raises a major methodological question that we systematically discuss with our clients before launching any project: dataset homogeneity.

- The risk of bias: A mask generated by SAM (very geometric, with a certain smoothing) does not have quite the same visual signature as a mask traced by a human (often more angular).

- The impact on training: If a Deep Learning model is trained on a mix of “AI” and “Human” masks, these variations can introduce bias and affect its generalization capacity.
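One practical way to keep this risk visible is to record how each mask was produced and to monitor the mix per class. The sketch below assumes each mask record carries a `label` and a `source` field (`"sam"` or `"manual"`); these field names are hypothetical and would depend on the annotation tool's export format.

```python
from collections import Counter, defaultdict


def provenance_mix(masks):
    """Share of each tracing source per class label."""
    counts = defaultdict(Counter)
    for mask in masks:
        counts[mask["label"]][mask["source"]] += 1
    return {
        label: {src: n / sum(by_source.values()) for src, n in by_source.items()}
        for label, by_source in counts.items()
    }


def mixed_classes(masks):
    """Labels whose masks come from more than one tracing source."""
    mix = provenance_mix(masks)
    return sorted(label for label, shares in mix.items() if len(shares) > 1)
```

Classes returned by `mixed_classes` are the ones where the hybrid-versus-manual decision deserves an explicit discussion with the client.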

Depending on the final model’s performance requirements, we define with our clients whether it is preferable to maintain a hybrid flow for speed or to prioritize 100% manual tracing on certain classes of objects, for example, to guarantee perfect data consistency.

4. The Industrial Pipeline: Automation via Object Detection Model + SAM Combo

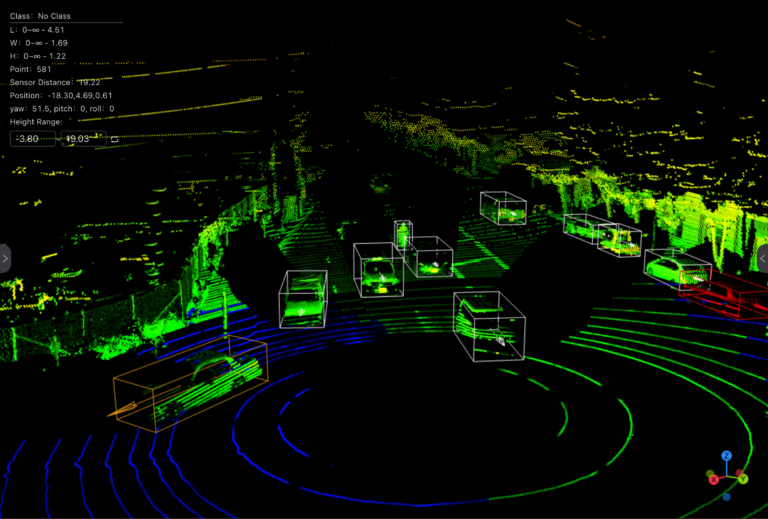

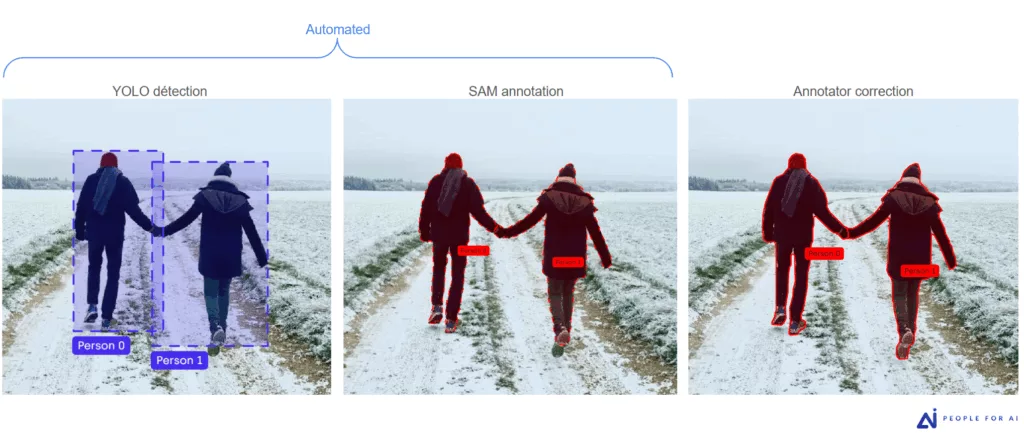

For projects requiring scaling across tens of thousands of images, and when image quality allows, we deploy an automated pre-annotation strategy. This approach relies on a two-stage architecture: business-specific detection followed by AI segmentation.

The Prerequisite: A Suitable Detection Model

The effectiveness of this pipeline depends on the project’s maturity. For SAM to generate masks automatically, it must receive a “prompt” (an instruction). Here, we use an object detection model like YOLO (You Only Look Once):

- Case A: The client already possesses an object detection model trained on their business data.

- Case B: The objects are standard enough to be detected by a high-performing pre-trained model like YOLO.
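In practice, the detector's output usually has to be converted before it can serve as a prompt. YOLO-style label files store boxes as normalized center coordinates plus width and height, whereas SAM's box prompt expects pixel corners in (x0, y0, x1, y1) order. A minimal conversion sketch, with a hypothetical function name, could look like this:

```python
def yolo_to_xyxy(cx, cy, w, h, img_w, img_h):
    """Convert a normalized YOLO box (center x, center y, width, height)
    into pixel corners (x0, y0, x1, y1), clamped to the image bounds."""
    x0 = (cx - w / 2) * img_w
    y0 = (cy - h / 2) * img_h
    x1 = (cx + w / 2) * img_w
    y1 = (cy + h / 2) * img_h
    return (
        max(0.0, x0),
        max(0.0, y0),
        min(float(img_w), x1),
        min(float(img_h), y1),
    )


print(yolo_to_xyxy(0.5, 0.5, 0.5, 0.5, 640, 480))  # (160.0, 120.0, 480.0, 360.0)
```

With the open-source `segment_anything` package, a box in this format would typically be passed as the `box` argument of `SamPredictor.predict` once the image has been loaded with `set_image`.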

How the Pipeline Functions

We have designed a pipeline that maximizes processing speed without compromising rigor. The process takes place in three key steps:

- Automatic Bbox Detection: Our developers deploy the object detection model across the entire raw dataset. This model scans each image to identify objects and generate bounding boxes.

- Automated Mask Generation: Our developers run SAM on the generated bboxes. Using each box as a “prompt” (reference guide), SAM instantly calculates the most probable segmentation mask for the detected object.

- Expert Review (Human-in-the-loop): Once this pre-processing is complete, we submit these pre-annotated images to our annotation teams. Their role changes in nature: they are no longer there to create the trace from scratch, but to act as controllers and validators. They correct any overflows, adjust contours in complex areas, or merge segments if necessary.
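The three steps above can be sketched as a small orchestration function. This is an illustrative outline rather than our production code: `detect` and `segment` are injected callables standing in for the detection model and for SAM, and the `PreAnnotation` record and its `status` values are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels
Mask = List[List[int]]                   # binary mask, one row per image row


@dataclass
class PreAnnotation:
    image_id: str
    box: Box
    mask: Mask
    status: str = "to_review"  # later set to "validated" or "corrected"


def pre_annotate(images, detect, segment):
    """Run detection, then box-prompted segmentation, and queue for review.

    `images` maps image ids to images, `detect(image)` returns a list of
    boxes, and `segment(image, box)` returns one mask for that box.
    """
    review_queue = []
    for image_id, image in images.items():
        for box in detect(image):          # step 1: automatic bbox detection
            mask = segment(image, box)     # step 2: mask generation from the box
            review_queue.append(PreAnnotation(image_id, box, mask))
    return review_queue                    # step 3: handed to human reviewers


# Stand-ins for the real models, only to show the data flow.
def fake_detect(image):
    return [(1, 1, 3, 3)]


def fake_segment(image, box):
    x0, y0, x1, y1 = box
    return [
        [int(x0 <= x < x1 and y0 <= y < y1) for x in range(len(image[0]))]
        for y in range(len(image))
    ]


queue = pre_annotate({"img_001": [[0] * 4 for _ in range(4)]}, fake_detect, fake_segment)
print(len(queue), queue[0].status)  # 1 to_review
```

Keeping the two models behind plain callables makes it possible to swap the client's own detector (Case A) for a pre-trained one (Case B) without touching the review step.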

A Massive Productivity Gain

While SAM in assistant mode already reduces workload (as seen in Chapter 1), its integration into a pre-annotation pipeline via YOLO goes even further: on a project for one of our clients, the annotation time per mask was halved compared to the classic assisted mode.

5. A Major Issue: Data Confidentiality and Security (GDPR)

The use of SAM raises a critical question for sensitive projects (medical, defense, personally identifiable data): where is the image processed? For Meta’s algorithm to segment an image, the image must be “loaded” onto a server equipped with the necessary computing power (GPU).

The limits of “zero transfer”: Using SAM requires a host infrastructure; it cannot function in isolation. When security policies strictly prohibit third-party or cloud hosting, deployment becomes complex. While a local installation (e.g., open-source CVAT) is an alternative, it increases technical overhead. Furthermore, accessing the tool via a virtual machine, which is often necessary to prevent data downloads, can create latency that negates the productivity gains SAM was intended to provide.

Before starting any project, we verify whether data transfer to our annotation tool servers is permitted. If data must remain strictly confined within the client’s systems, manual annotation via secure remote access becomes the preferred scenario.

Conclusion: AI for Speed, Humans for Reliability

The integration of Segment Anything (SAM) into our annotation processes marks a major turning point: by significantly reducing production time, this technology allows us to meet scaling challenges with unprecedented responsiveness.

However, AI does not replace discernment. Whether it is overcoming a lack of contrast, managing complex occlusions, or guaranteeing perfect dataset homogeneity, the expertise of annotators remains essential.

Adopting SAM means choosing power, but calling on People For AI ensures that this power is channeled by rigorous human methodology and secure data management.

Ready to accelerate your Computer Vision projects? Contact us for a custom dataset assessment and let’s define the most effective pipeline for your quality requirements.