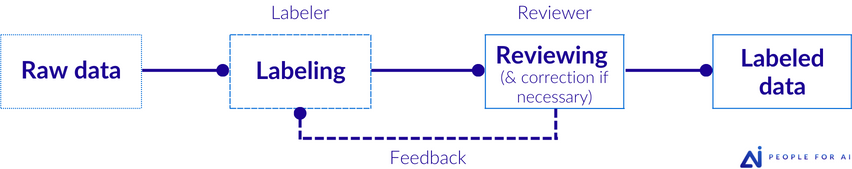

Reviewing (the act of carrying out a review) is a process intended to raise the quality of annotated batches. Of the four types of validation workflow, reviewing and the absence of validation are the two most common, and they determine how the accuracy of annotated data is evaluated.

This process is commonly chosen for its cost-effectiveness. Each piece of data is annotated by a single labeler. After this initial labeling, a review and correction phase takes place: a reviewer assesses and validates part or all of the previously labeled dataset, which also provides valuable feedback to the annotator.

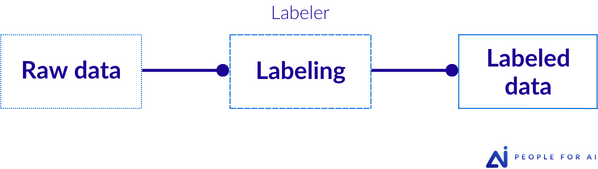

A process without reviewing is the other common workflow: the label receives no validation other than the annotator’s own assessment.

In most projects, however, an adaptive workflow is the most effective. At the beginning of the project, a robust review process takes place. As labelers improve their performance through feedback from the reviewing process, the proportion of reviewing in the workflow gradually decreases.
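The adaptive workflow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the fixed step size, the minimum review rate, and the rule "lower the rate when the observed error rate meets the target" are all hypothetical choices, not a prescribed method.

```python
import random


def next_review_rate(current_rate, observed_error_rate, target_error_rate,
                     step=0.1, min_rate=0.05, max_rate=1.0):
    """Return the review rate for the next batch (hypothetical rule).

    If the errors found during review are at or below the target,
    review less; otherwise review more. The result is clamped to
    [min_rate, max_rate] so that some review always remains.
    """
    if observed_error_rate <= target_error_rate:
        new_rate = current_rate - step
    else:
        new_rate = current_rate + step
    # Round to avoid floating-point noise such as 0.7999999999999999.
    return round(max(min_rate, min(max_rate, new_rate)), 4)


def sample_for_review(item_ids, review_rate, seed=None):
    """Randomly pick the share of items that a reviewer will check."""
    rng = random.Random(seed)
    count = round(len(item_ids) * review_rate)
    return rng.sample(item_ids, count)


# Start of project: everything is reviewed, then the rate adapts.
rate = 1.0
rate = next_review_rate(rate, observed_error_rate=0.02, target_error_rate=0.05)
to_review = sample_for_review(list(range(100)), rate, seed=0)
```

In practice the step size and the floor would be tuned per project. Keeping a non-zero minimum rate is a design choice that lets a drop in quality still be detected late in the project.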

To review effectively, reviewers must receive more extensive training on complex cases and be more experienced than labelers. A reviewer is normally an expert or senior labeler, or a project manager. As a result, they have a deeper understanding of your specific challenges and requirements and can provide a more comprehensive and insightful review.

Factors that influence the duration of the review

To maximize efficiency, the review percentage and the time spent reviewing will depend on many factors, such as:

- Task complexity: More complex tasks require special attention from reviewers to facilitate the annotator training

- Annotators’ expertise: Depending on the labelers’ position on the learning curve, more or less review may be necessary

- Reviewers’ expertise

- Clarity of instructions: The clearer the instructions are, the less review will be needed to identify specific edge cases that have to be resolved (internally or by the client)

- Error rate expected by the client: The lower the requested error rate, the more review is necessary to achieve it

We should keep in mind that a 100% review percentage does not guarantee the quality of the annotated batch.
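A small back-of-the-envelope model can illustrate why even a 100% review percentage does not bring the error rate to zero. The sketch below is a deliberate simplification: it assumes errors are spread evenly across the batch, that reviewers catch a fixed share of the errors they see, and that reviewers introduce no new errors. The function name and all parameters are hypothetical.

```python
def residual_error_rate(annotator_error_rate, review_fraction, reviewer_catch_rate):
    """Estimate the error rate left in a batch after review (simplified model).

    All three arguments are fractions between 0 and 1:
    - annotator_error_rate: share of labels that are wrong before review
    - review_fraction: share of the batch that is reviewed
    - reviewer_catch_rate: share of errors a reviewer detects and corrects
    """
    for name, value in (("annotator_error_rate", annotator_error_rate),
                        ("review_fraction", review_fraction),
                        ("reviewer_catch_rate", reviewer_catch_rate)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Errors are only corrected when the item is reviewed AND the reviewer spots them.
    corrected = annotator_error_rate * review_fraction * reviewer_catch_rate
    return annotator_error_rate - corrected


# Full review, but the reviewer misses some errors: a residual error remains.
remaining = residual_error_rate(0.10, review_fraction=1.0, reviewer_catch_rate=0.9)
```

Under these assumptions, a lower error rate requested by the client can be reached either by reviewing a larger share of the batch or by improving how reliably reviewers catch errors, which is why reviewer expertise appears among the factors above.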

Synonyms: QA, Quality Assurance (cf. SuperAnnotate)

Words of the same family: Reviewing, Reviewer.