AI-Powered Video Moderation for a Global Social Platform

Our client aspires to become a worldwide standard for creative expression, cultural discovery and interactive entertainment. By bringing together creators and users from all backgrounds, our client aims to build a space where everyone can tell their story, share their talents and connect authentically with a global audience.

To keep its content platform as inclusive as possible, the client needs to moderate the content shared by creators.

CONTEXT

By giving a voice to millions of creators, our customer is shaping the digital culture of tomorrow.

Moderation on social platforms like this one is essential to ensure that the content remains safe and appropriate for users.

On average, 100 hours of video are posted every minute on our client’s platform, according to SendShort. That is a lot of video to moderate, which is why our customer chose to get help from artificial intelligence models that work on images, video and text.

To train its models, the customer needed a large number of precisely annotated videos.

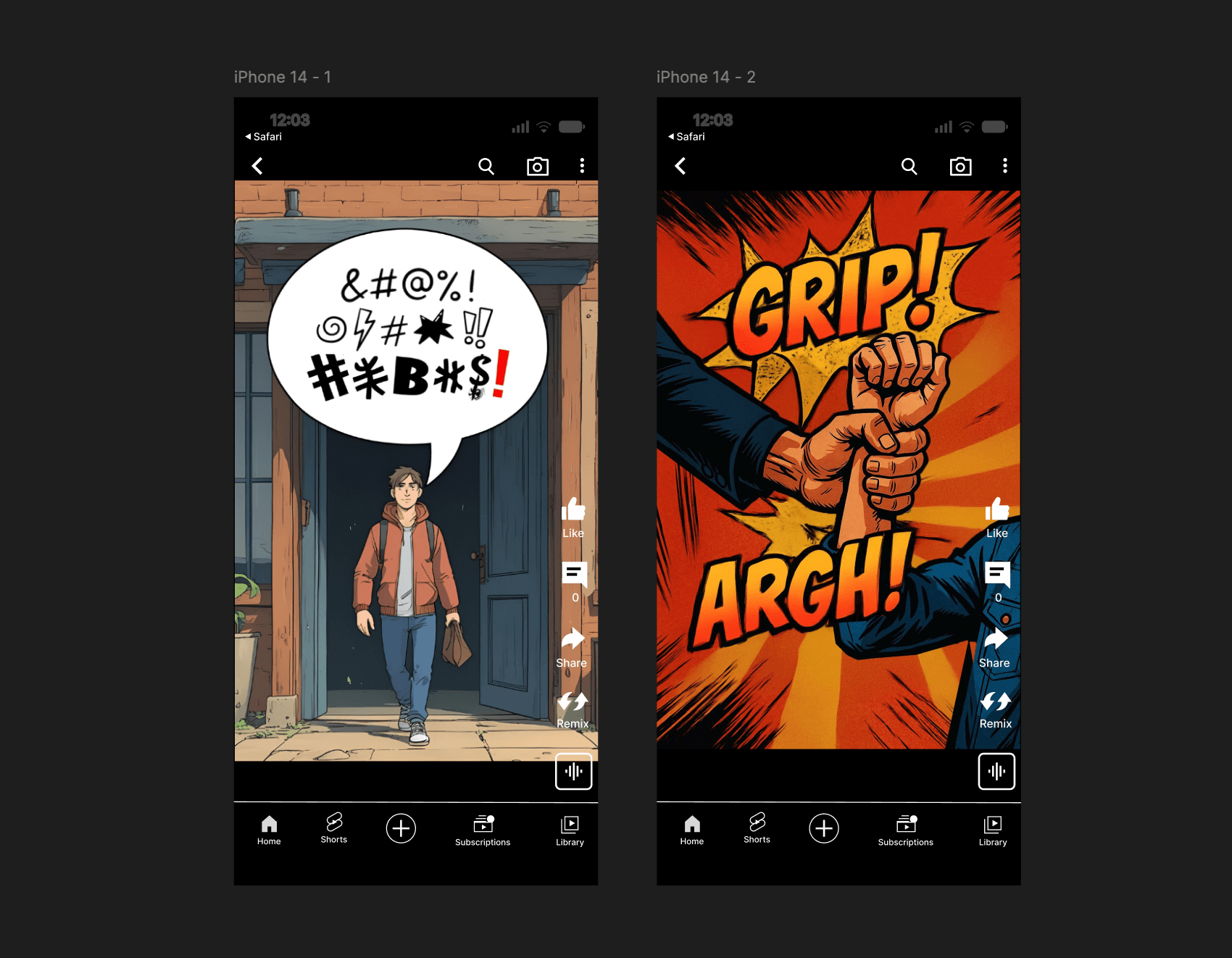

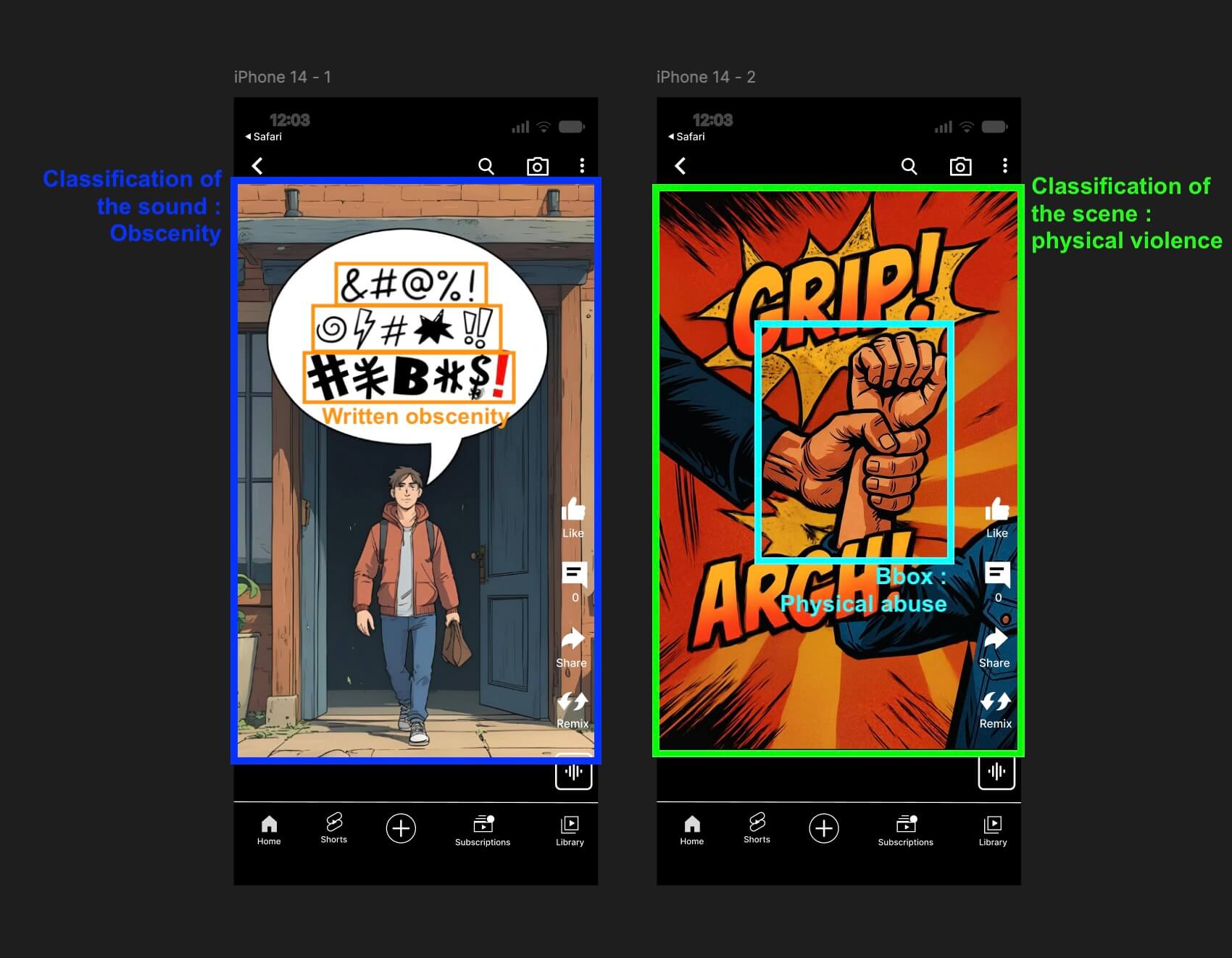

The annotation task consisted of moderating the videos provided by the customer.

To carry out this annotation project, the customer requested the assistance of People for AI.

OUR SOLUTION

We began by refining the annotation guide in collaboration with the customer, in order to clarify the many concepts to be moderated. The aim was to precisely define the boundaries between what needed to be moderated and what could be kept intact, taking into account grey areas and possible interpretations.

Annotators also went through in-depth training on the different classes. The project was made even more complex by the fact that the classes were not mutually exclusive: the same video could contain elements of nudity, racism or even calls for violence.
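Because the classes were not mutually exclusive, each video is best thought of as carrying a set of labels rather than a single one. The sketch below illustrates that idea only. The class names, the `VideoAnnotation` type and its methods are hypothetical and chosen for illustration; the customer's actual taxonomy and annotation tool are not described in this case study.

```python
from dataclasses import dataclass, field

# Hypothetical class names for illustration only; the real project started
# with 11 classes whose exact definitions are not published here.
CLASSES = ("nudity", "racism", "call_for_violence")


@dataclass
class VideoAnnotation:
    """Multi-label annotation: one video can carry several classes at once."""

    video_id: str
    labels: set[str] = field(default_factory=set)

    def add_label(self, label: str) -> None:
        # Reject labels that are not part of the agreed taxonomy.
        if label not in CLASSES:
            raise ValueError(f"unknown class: {label}")
        self.labels.add(label)

    def to_multi_hot(self) -> list[int]:
        # One slot per class; several slots can be 1 for the same video.
        return [1 if name in self.labels else 0 for name in CLASSES]


annotation = VideoAnnotation("video_001")
annotation.add_label("nudity")
annotation.add_label("call_for_violence")
print(annotation.to_multi_hot())  # [1, 0, 1]
```

A multi-hot vector of this kind is a common training target for multi-label classifiers, since it lets a model predict each class independently instead of forcing a single choice per video.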

Throughout the project, we maintained active communication with the client, which enabled us to adapt quickly to any adjustments in instructions.

Finally, our team quickly adopted the tool provided by the customer, which allowed rapid integration and annotation directly in the customer's environment.

Our solution in figures:

A team of nearly 15 full-time staff, including two project managers.

Each annotator received over 24 hours of training.

11 classes at the outset, which later had to be broken down into more precisely described sub-classes.

Matthieu Warnier

Data Labeling Director @ People for AI

« This was a particularly demanding project, with a client with high expectations. The complexity of moderation lies above all in the training of the annotators, who must have broad general knowledge and be able to discern the sometimes subtle boundary between what must be moderated and what can remain published.

The whole team worked with rigor and determination to meet the customer’s requirements. Fortunately, the overflowing creativity of the content creators made the project stimulating: our annotators and reviewers never got bored. »

OUR IMPACT

Nearly 2,000 videos processed per day.

Over 1,000 obscene phrases were found, enabling the creation of a dictionary and automatic detection of obscenities.

Our customer has improved its automatic detection of videos to be moderated, saving thousands of man-hours per week.
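As an illustration of how a dictionary of obscene phrases can support automatic detection, here is a minimal sketch of dictionary-based matching on text such as captions or transcripts. It is not the customer's implementation, which this case study does not describe. The function names `build_detector` and `find_flagged` and the placeholder dictionary entries are assumptions made for the example.

```python
import re


def build_detector(phrases):
    """Compile a case-insensitive, whole-word matcher for a phrase dictionary."""
    if not phrases:
        raise ValueError("the phrase dictionary must not be empty")
    # Longest phrases first, so a multi-word entry wins over a shorter one
    # that starts at the same position.
    ordered = sorted(phrases, key=len, reverse=True)
    pattern = r"\b(?:" + "|".join(re.escape(p) for p in ordered) + r")\b"
    return re.compile(pattern, re.IGNORECASE)


def find_flagged(detector, text):
    """Return the dictionary phrases found in the text, lowercased, in order."""
    return [match.group(0).lower() for match in detector.finditer(text)]


# Placeholder entries; the real dictionary holds over 1,000 phrases.
PLACEHOLDER_DICTIONARY = ["badword", "very bad phrase"]

detector = build_detector(PLACEHOLDER_DICTIONARY)
print(find_flagged(detector, "This caption has a BadWord and a very bad phrase."))
# ['badword', 'very bad phrase']
```

Exact matching of this kind is simple and fast, but it misses misspellings and obfuscated variants and assumes phrases begin and end with word characters, so in practice it would more likely complement a trained model than replace it.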

An anecdote about the project:

As the customer was in a hurry to retrain its model, we had to expand our team twice in order to meet the deadline. Fortunately, the customer's algorithm was already filtering out the most sensitive content, making the project particularly enjoyable for our annotators. Several team members even asked to be given priority for this assignment!

Who would have thought that analyzing videos from social networks could be so motivating… and so profitable?

Head of AI of our client

Client of People for AI since 2023

« We worked with the PFAI team on a particularly complex annotation project involving multiple types of data (images, videos, texts, sound). Their ability to manage the project efficiently, adapt quickly to changes, and maintain smooth communication throughout the process was key. They successfully mobilized the necessary resources within tight timelines while consistently delivering high-quality results. »

Thanks to the thousands of annotated videos, our customer has been able to more easily moderate the thousands of hours of videos posted every day on its platform.

This moderation helps ensure a safe, regulatory-compliant environment, while reinforcing content quality and user confidence.
