If I were to tell you that the performance of Machine Learning algorithms depends on the training data quality, you would say that you are not learning anything new about data labeling. There is even a proverbial phrase about this:

Garbage in, garbage out.

Yet, we don’t hear much about the companies involved in creating labeled data sets, as this is a relatively new field.

As Data labeling is still little known to people, many misconceptions remain. This does not facilitate communication between companies developing Machine Learning algorithms and companies labeling data.

So to help you in your data labeling project, here are 3 common preconceptions to avoid!

I – Data Labeling is always easy

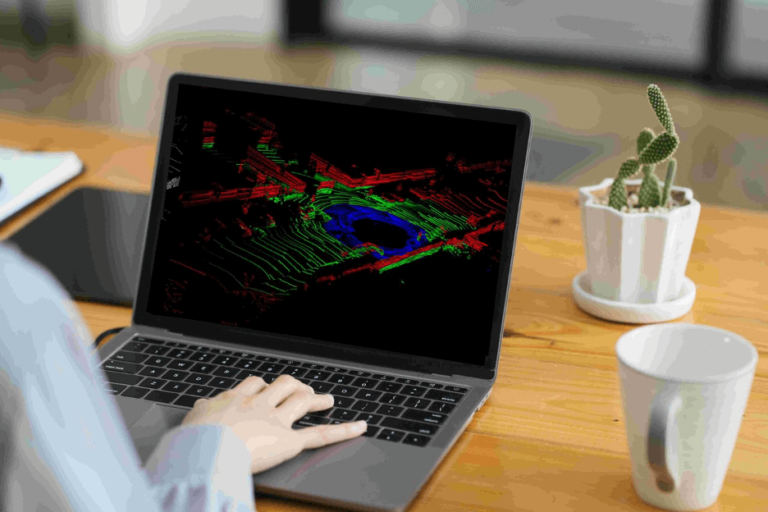

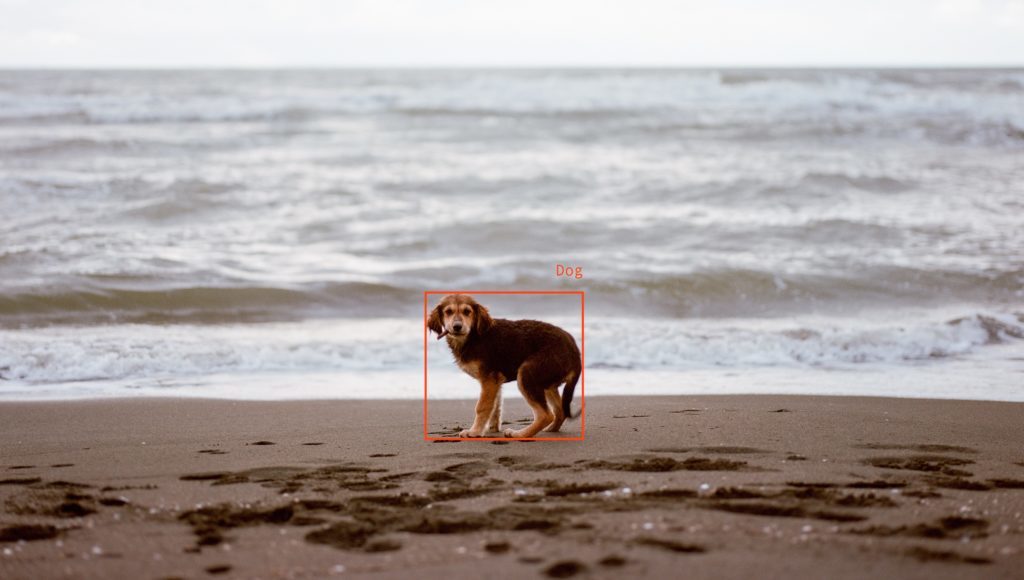

Not really. While some projects are trivial (e.g. labeling bounding boxes around people’s faces), Machine Learning algorithms detect increasingly complex events. This requires better-trained labellers, better management, tailor-made labeling processes and adapted labeling tools. All this efforts will reduce errors and increase labeling speed.

Even on seemingly trivial projects, we often deal with some of the following challenges:

About clients’ requirements:

- Understanding precisely what needs to be labeled, managing edge-cases and concept drift monitoring. [Concept drift occurs when the definitions of labeled objects slowly diverge]

- Understanding client expected outputs (formatting the output data).

Labeling tool’s adaptation:

- Adding pre-annotations on the interface of labelers to accelerate labeling

- Implementing Active Learning (the fact that the AI model gives you only images when the AI model is not sure), interpolations (between frames of a video for example) or AI models to label faster.

We could introduce to you our preferred partner Kili Technology that helps data scientists to leverage these features into their data labeling platform.

Assessing and improving the quality:

- Defining well adapted quality metrics, monitoring them and improving labels if necessary;

- Automatic error detection based on geometric calculations or logical rules.

On a frequent basis, your project will also encounter specific challenges that we will help resolve.

———-

Last but not least, the complexity will increase with the number of classes and rules in the instructions.

II – Data labeling is always fast

All the previous challenges take time for the labeling expert. It also sometimes requires some IT development time for tool improvement.

Refining the instructions and training the labelers requires interaction between the labeling company and the client which takes additional time.

Moreover, the more complex, lengthy and time-consuming your data labeling project is, the more advantageous it will be to start with a phase of clarifications, tests and adjustments before scaling up your project.

Let’s see why:

The Quality/Speed balance’s adjustment

Setting the appropriate quality level requires interaction between client and labeling company. Quality KPI may be sometimes helpful but not mandatory.

Instructions will evolve

More data leads to more questions and edge-cases. It’s difficult to have good instructions right from the start. Clarifying the instructions as soon as possible will avoid concept drift during the project.

Labeling tool’s adaptation and validation

Labellers and reviewers will be faster if the labeling interface is well adapted to the task. Adhoc automatic error detections can be added to the tool to increase quality.

The tool must also include quality and team features : reviewing process, team management, client view, Q/A, etc…

It takes some time to build a proficient team

It’s beneficial to start by training a single task expert. Training is much easier once the instructions and the tool are finalized. A clear organization for quality assurance needs to be implemented.

———-

This definition phase may take time but again it will help you in the long run to scale your labeling project. Moreover, you will learn a lot about your own labeling project which is precious for you and for our labellers as well.

III – Data labeling is always cheap

We have seen above that data labeling is not always easy and it is not always fast, consequently data labeling cannot always be cheap.

If you only look at the hourly rate of a freelance labeler on the other side of the world, labeling can be very cheap (e.g. 2$ per hour of labeling).

However, if your project is big and non-trivial it requires a large and organized team with a complex annotation process (training, consensus, review, etc.). This implies that you will need dedicated managers, labeling experts and precise labeling tools that have a team management feature.

Independent freelancers cannot label large and non-trivial projects because the proportion of imprecisions/errors in your training dataset might be important. You may try to compensate for the low-quality training dataset with hours of fine-tuning and parameterization of your ML model. It can create heavy delays. However, refining your ML models may not compensate heavy inaccuracies. Then the only remaining solution to keep your project alive is to label your dataset again.

Final thoughts on data labeling

If you outsource this task to a data labeling company, your project will have a much higher probability of success. It will be more likely that you will get timeliness with the expected accuracy and bring the desired added value to your business.

Andrew Ng recently explained that while Machine Learning engineers focus a lot on their algorithms and their accuracy, the most significant ROI today is in increasing the quality of the training data sets.

High performance AI = Good model + Good data

Andrew Ng

To conclude, quality, price and speed are parameters that only a specialized company in data labeling will be able to adjust for your project’s needs. Facing the explosion of the complexity of AI projects, expertise in data labeling is now necessary to clearly understand your needs and respond to them in the best possible way.

Join our team and work with us

We are continuously looking for talents to grow our team. Apply with a CV and an email to our open positions.